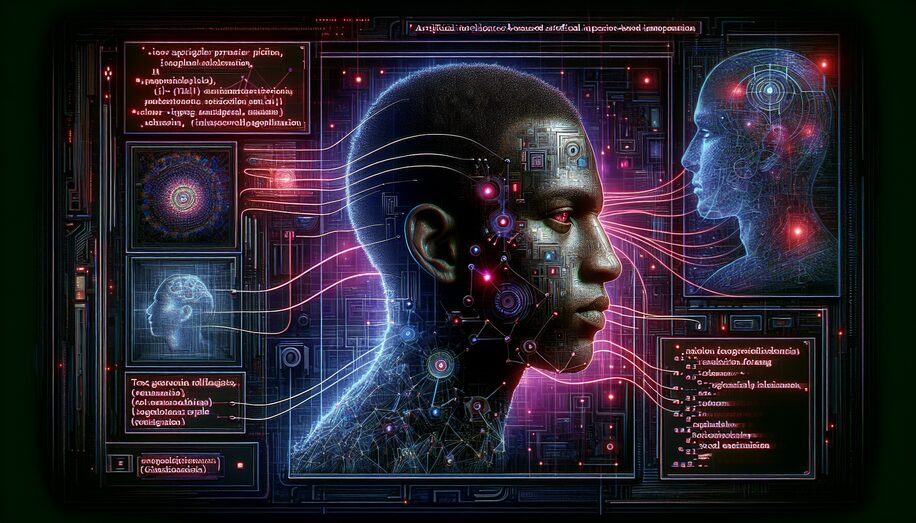

Is AI About to Cause a Fraud Crisis? OpenAI’s CEO Thinks So

Why Impersonation by AI Has Sam Altman Concerned

Hey there! You know how sci-fi movies often depict AI as a double-edged sword, full of potential but also a bit scary? It turns out that's not just Hollywood drama. Sam Altman, the CEO of OpenAI, recently warned that we may be heading toward a significant fraud crisis fueled by artificial intelligence. Crazy, right?

The problem mainly revolves around AI getting so advanced that it could help bad actors pull off impersonations like never before. Imagine someone faking voices, writing styles, or even entire personas, all thanks to AI. Sounds like a plot from the movies, but here we are discussing it over our morning coffee! Let’s dive deeper.

A New Breed of Impersonators?

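Altman's concerns aren't unfounded. With AI advancing this quickly, it's becoming alarmingly easy for folks with less-than-honest intentions to impersonate just about anyone. Altman singled out banks that still let customers verify their identity with a voiceprint, a safeguard he says AI has already defeated. More broadly, we're talking about: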

- Deepfake audio and video that mimic real people convincingly.

- AI-generated texts that can imitate the writing style of individuals.

- Social engineering tactics supercharged by these AI tools.

It’s as if an impersonator walked into a high-tech costume store and came out indistinguishable from the real person. Spooky, right?

The Fine Line Between Good and Evil in AI

Here's the kicker: the technology itself isn't inherently bad. In fact, AI has tons of positive uses. It can assist in creating art, streamline tasks, or even support healthcare. The issue arises when it's misused. We're basically looking at the same toolset, just in the wrong hands.

Think of it like the internet: a source of immense knowledge and connectivity, yet also a platform for misinformation and scams. AI is following a similar pattern, but potentially on an even grander scale. That's why folks like Sam Altman are understandably nervous.

How Do We Tackle This?

So, what’s the plan? Altman and other leaders in tech suggest some proactive strategies:

- Regulation and Oversight: Governments might need to step up and create guidelines and regulations to prevent misuse.

- AI Literacy: Increasing public awareness about AI’s capabilities and limitations could be crucial. The more we know, the better we can guard against potential scams.

- Tech Evolution: Building AI that can detect and counter malicious AI would let the tech industry fight fire with fire, but constructively.

It’s all about staying ahead of the curve and being prepared. Like having a first-aid kit for technology snafus!

The Coffee Table Conclusion

Chatting about AI impersonation isn’t just a theoretical exercise anymore. As we enjoy the marvels of advanced technology, we also need to keep an eye out for the challenges that come with it. Altman’s warnings give us food for thought: how do we balance innovation with responsibility?

The world of AI is exciting, no doubt. But it's also one where we need to tread carefully. It's kind of like walking through an exotic forest: beautiful, but you keep your eyes peeled for any lurking surprises. So the next time we read a suspicious email from a "friend" or watch a viral video that seems too outrageous to be true, we might need to ask ourselves: is this the work of a sneaky AI?

Feel free to drop your thoughts on this, as it’s a topic that affects us all — gamer, techie, or just your everyday internet user. Here’s to hoping our favorite sci-fi plot remains fiction, mostly!
